Optimum是huggingface transformers库的一个扩展包,用来提升模型在指定硬件上的训练和推理性能。该库文档地址为 Optimum。

基于Optimum,用户在不需要学习过多的API基础上,就可以提高模型训练和推理性能(亲测有效)。

可以使用如下命令进行安装:

python -m pip install optimum

#!pip install optimum如果向安装指定硬件的optimum,可以参考如下表格:

| 硬件 | 安装命令 |

|---|---|

| ONNX runtime | python -m pip install optimum[onnxruntime] |

| Intel Neural Compressor (INC) | python -m pip install optimum[neural-compressor] |

| Intel OpenVINO | python -m pip install optimum[openvino,nncf] |

| Graphcore IPU | python -m pip install optimum[graphcore] |

| Habana Gaudi Processor (HPU) | python -m pip install optimum[habana] |

如果想安装最新版(源码安装),使用如下命令:

python -m pip install git+https://github.com/huggingface/optimum.git 如果源码安装需要指定硬件,那么需要添加#egg=optimum[accelerator_type],如下命令:

python -m pip install git+https://github.com/huggingface/optimum.git#egg=optimum[onnxruntime] 如果是国内安装的话,记得加上-i https://pypi.tuna.tsinghua.edu.cn/simple。

本节主要是通过快速使用optimum完成训练和推理,初步的体验下该库的魅力!如果需要深入理解这个库如何使用,需要深入具体的代码进行step in阅读。

使用onnxruntime加速推理,optimum通过配置对象来定义图优化和量化的参数,这些对象将被用来初始化各种优化器和量化器。

在进行量化或优化之前,我们首先需要加载模型。为了使用ONNX runtime进行加速推理,我们只需要将前缀为AutoModelForXxx的类替换为ORTModelForXxx即可。如果是从pytorch模型上加载,则需要在`from_pretrained函数中指定参数from_transformers=True,具体代码如下:

from optimum.onnxruntime import ORTModelForSequenceClassification

from transformers import AutoTokenizer

model_checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

save_directory = "tmp/onnx/"

# Load a model from transformers and export it to ONNX

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

ort_model = ORTModelForSequenceClassification.from_pretrained(model_checkpoint, from_transformers=True)

# Save the ONNX model and tokenizer

ort_model.save_pretrained(save_directory)

tokenizer.save_pretrained(save_directory)进行量化也很方便,代码如下:

from optimum.onnxruntime.configuration import AutoQuantizationConfig

from optimum.onnxruntime import ORTQuantizer

# Define the quantization methodology

qconfig = AutoQuantizationConfig.arm64(is_static=False, per_channel=False)

quantizer = ORTQuantizer.from_pretrained(ort_model)

# Apply dynamic quantization on the model

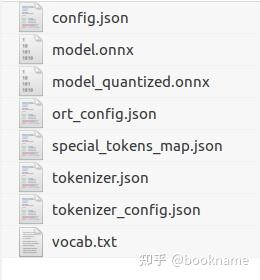

quantizer.quantize(save_dir=save_directory, quantization_config=qconfig)上述代码运行后,会在`save_directory`上创建onnx及其量化的模型,文件列表如下图所示:

上图中的model.onnx是pytorch转为onnx模型文件,model_quantized.onnx是量化后的模型文件。

对比下transformers模型和onnx量化后模型运行速度对比。代码如下:

save_directory = "tmp/onnx/"

model_checkpoint = "/pretrained_weights/distilbert-base-uncased-finetuned-sst-2-english"

from transformers import AutoModelForSequenceClassification

from transformers import pipeline, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained(model_checkpoint)

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

cls_pipeline = pipeline("text-classification", model=model, tokenizer=tokenizer)

# 下面一行单独开一个cell运行

%timeit results = cls_pipeline("I love this story, are you like it?")

## 15.7 ms ± 522 μs per loop (mean ± std. dev. of 7 runs, 100 loops each),不同硬件的运行结果会有不同上面是transformers的运行结果,下面看下onnx量化模型的运行速度:

model = ORTModelForSequenceClassification.from_pretrained(save_directory, file_name="model_quantized.onnx")

tokenizer = AutoTokenizer.from_pretrained(save_directory)

cls_pipeline = pipeline("text-classification", model=model, tokenizer=tokenizer)

results = cls_pipeline("I love burritos!")

# 下面一行单独开一个cell运行

%timeit results = cls_pipeline("I love this story, are you like it?")

## 4.2 ms ± 281 μs per loop (mean ± std. dev. of 7 runs, 100 loops each)4.2ms对15.7ms,确实速度快了很多!棒!!!

使用onnx runtime来加速训练,需要使用ORTTrainer 代替transformers的Trainer,代码如下所示:

- from transformers import Trainer, TrainingArguments

+ from optimum.onnxruntime import ORTTrainer, ORTTrainingArguments

# Download a pretrained model from the Hub

model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased")

# Define the training arguments

- training_args = TrainingArguments(

+ training_args = ORTTrainingArguments(

output_dir="path/to/save/folder/",

optim="adamw_ort_fused",

...

)

# Create a ONNX Runtime Trainer

- trainer = Trainer(

+ trainer = ORTTrainer(

model=model,

args=training_args,

train_dataset=train_dataset,

+ feature="sequence-classification", # The model type to export to ONNX

...

)

# Use ONNX Runtime for training!

trainer.train()还没来及测试,目前还是喜欢使用原始的训练方式!嘿嘿,有空试试!

某某自来水业务系统,是一套适合各种规模自来水公司的网络版自来水多种类业务管理软件。根据各大自来水公司存在的问题和需求自主...

某某自来水业务系统,是一套适合各种规模自来水公司的网络版自来水多种类业务管理软件。根据各大自来水公司存在的问题和需求自主...

某某自来水业务系统,是一套适合各种规模自来水公司的网络版自来水多种类业务管理软件。根据各大自来水公司存在的问题和需求自主...

某某自来水业务系统,是一套适合各种规模自来水公司的网络版自来水多种类业务管理软件。根据各大自来水公司存在的问题和需求自主...